Hiding in Plain Sight

Nov 11, 2021 | samczsun

I like challenging assumptions.

I like trying to do the impossible, finding what others have missed, and blowing people's minds with things they never saw coming. Last year, I wrote a challenge for Paradigm CTF 2021 based on a very obscure Solidity bug. While one variation had been publicly disclosed, the vulnerability I exploited had never really been discussed. As a result, almost everyone who tried the challenge was stumped by the seemingly impossible nature of it.

A few weeks ago, we were discussing plans around Paradigm CTF 2022 when Georgios tweeted out a teaser. I thought it would be incredibly cool to drop a teaser challenge on the same day as the kickoff call. However, it couldn't just be any old teaser challenge. I wanted something out of this world, something that no one would see coming, something that pushed the limits of what people could even imagine. I wanted to write the first Ethereum CTF challenge that exploited an 0day.

🟩 🏁 @paradigm_ctf 👀👀👀

— Georgios Konstantopoulos (@gakonst) October 12, 2021

w/ @samczsun @TylerCrimm @AmanGotchu @williamLberman pic.twitter.com/QCMCYBHNbs

How It's Made: 0days

As security researchers, there are a few base assumptions we make in order to optimize our time. One is that the source code we're reading really did produce the contract we're analyzing. Of course, this assumption only holds if we're reading the source code from somewhere trusted, like Etherscan. Therefore, if I could figure out a way to have Etherscan verify something incorrectly, I would be able to design a really devious puzzle around it.

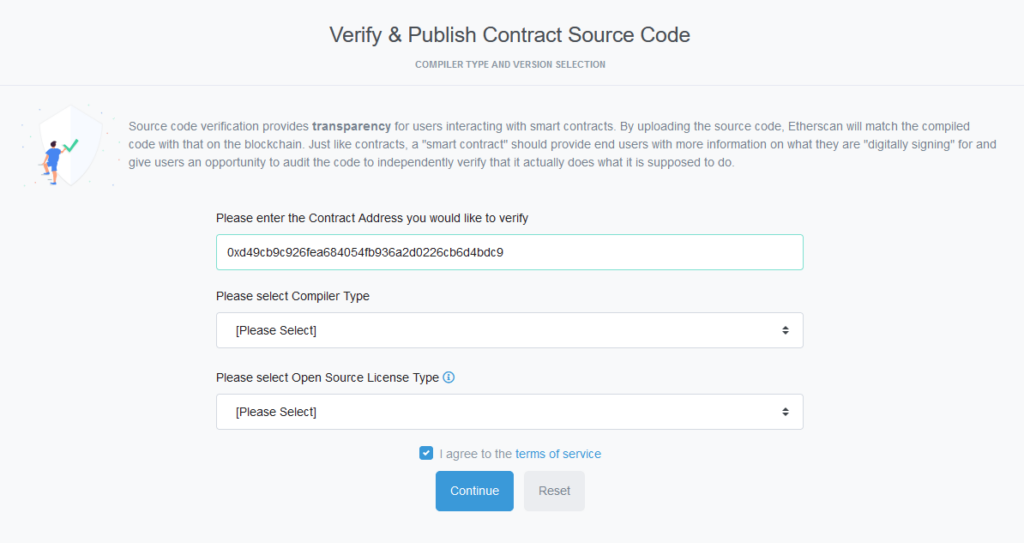

In order to figure out how to exploit Etherscan's contract verification system, I had to verify some contracts. I deployed a few contracts to Ropsten to play around with and tried verifying them. Immediately, I was greeted with the following screen.

I selected the correct settings and moved onto the next screen. Here, I was asked to provide my contract source code.

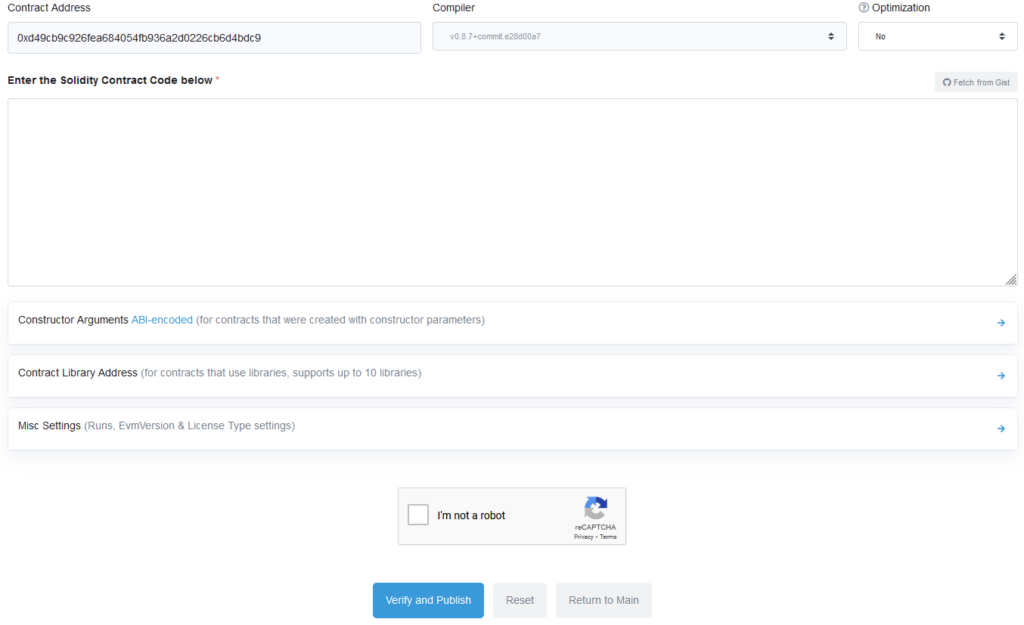

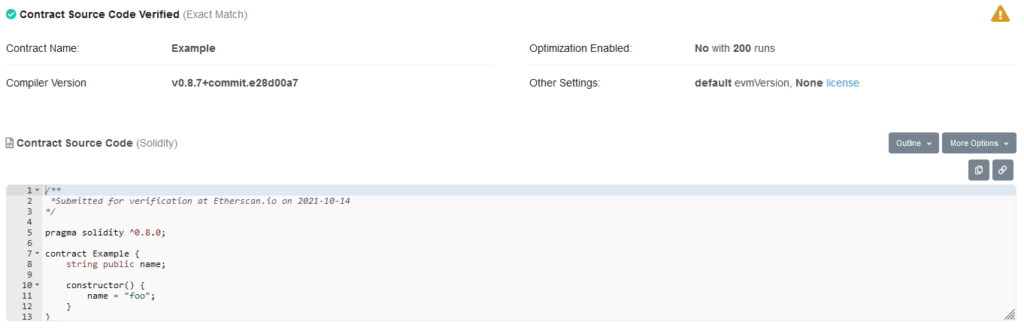

I put in the source code and clicked verify. Sure enough, my source code was now attached to my contract.

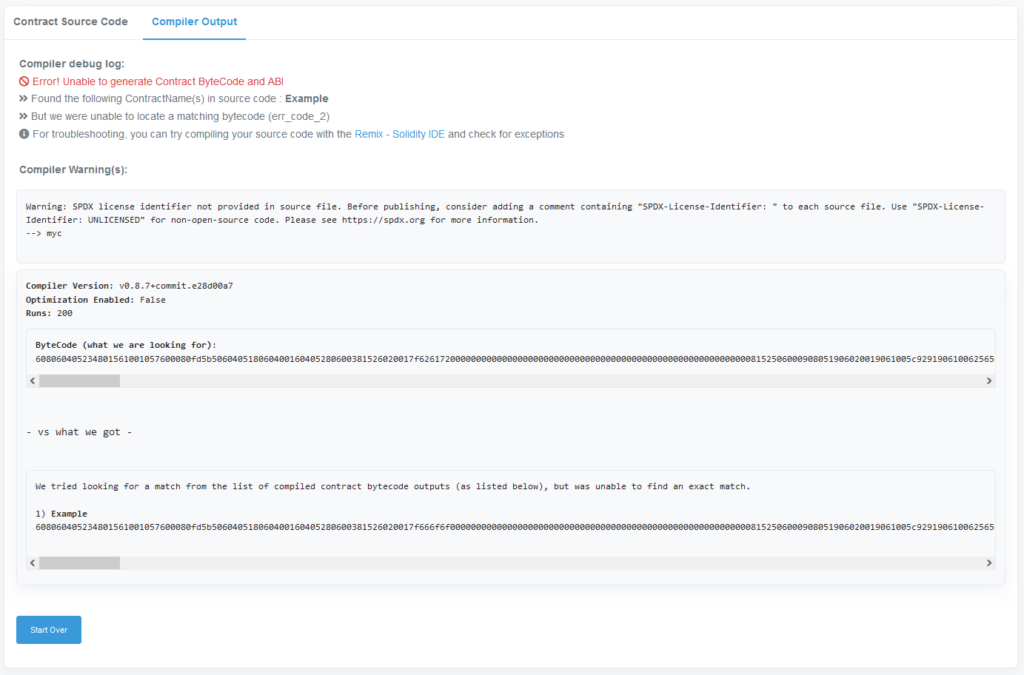

Now that I knew how things worked, I could start playing around with the verification process. The first thing I tried was deploying a new contract with foo changed to bar and verifying that contract with the original source code. Unsurprisingly, Etherscan refused to verify my contract.

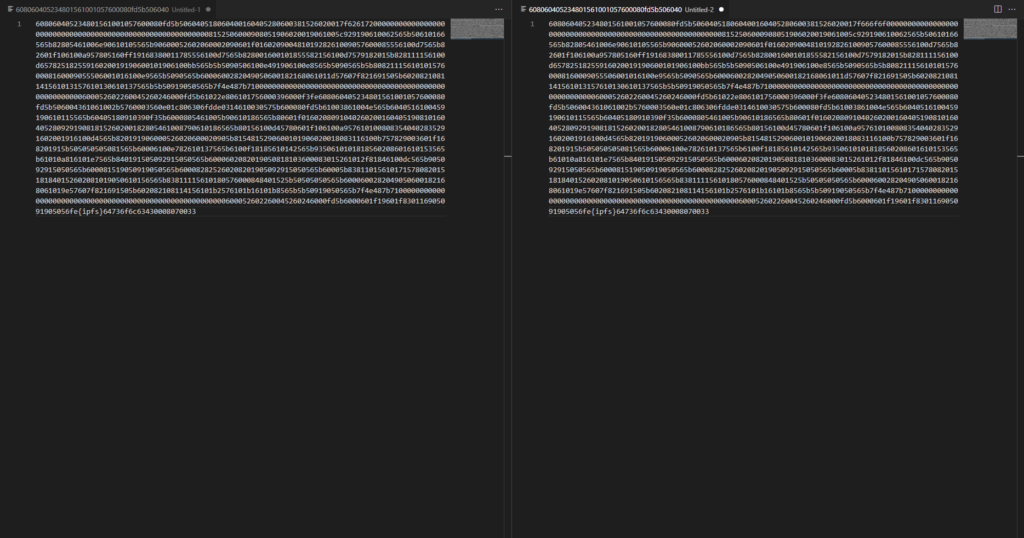

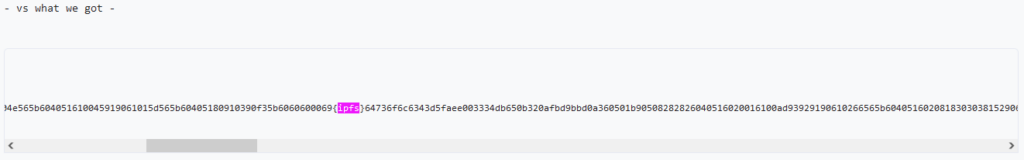

However, when I manually compared the two bytecode outputs, I noticed something strange. Contract bytecode is supposed to be hex, but there was clearly some non-hex in there.

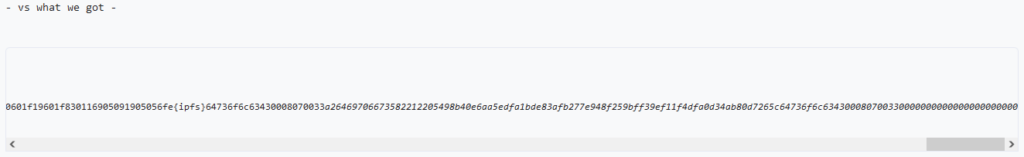

I knew that Solidity appended contract metadata to the deployed bytecode, but I never really considered how it affects contract verification. Clearly, Etherscan was scanning through the bytecode for the metadata and then replacing it with a marker that said, "Anything in this region is allowed to be different, and we'll still consider it the same bytecode."
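For reference, the metadata trailer that recent Solidity versions append has a fixed shape: a small CBOR map holding an IPFS multihash and the compiler version, followed by two bytes giving the length of that map. The Python sketch below builds and splits such a trailer. The placeholder code bytes, digest, version, and helper names are made up for illustration.

```python
# Layout (IPFS variant): a2 64 "ipfs" 58 22 <34-byte multihash> 64 "solc" 43 <3 bytes> <2-byte length>
IPFS_HEAD = bytes.fromhex("a2646970667358221220")  # map(2), "ipfs", bytes(34), multihash header 12 20
SOLC_KEY = bytes.fromhex("64736f6c6343")           # "solc", bytes(3)


def build_trailer(digest: bytes, version: bytes) -> bytes:
    """Assemble the CBOR map and append its length as two big-endian bytes."""
    assert len(digest) == 32 and len(version) == 3
    cbor = IPFS_HEAD + digest + SOLC_KEY + version
    return cbor + len(cbor).to_bytes(2, "big")


def split_trailer(bytecode: bytes):
    """Return (code, digest, version), locating the map via the final two bytes."""
    cbor_len = int.from_bytes(bytecode[-2:], "big")
    cbor = bytecode[-2 - cbor_len:-2]
    if not cbor.startswith(IPFS_HEAD) or cbor[42:48] != SOLC_KEY:
        raise ValueError("no IPFS metadata trailer found")
    return bytecode[:-2 - cbor_len], cbor[10:42], cbor[-3:]


runtime = bytes.fromhex("6080604052")                    # placeholder code, not a real contract
trailer = build_trailer(b"\x11" * 32, bytes([0, 8, 9]))  # hypothetical digest and version
code, digest, version = split_trailer(runtime + trailer)
```

The map is 51 bytes long, which is where the trailing `0033` in real bytecode comes from.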

This seemed like a promising lead for a potential 0day. If I could trick Etherscan into interpreting non-metadata as metadata, then I would be able to tweak my deployed bytecode in the region marked {ipfs} while still having it verify as the legitimate bytecode.
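To make the idea concrete, here is a rough sketch of what a metadata-tolerant comparison could look like. Etherscan's real implementation is not public, so the regular expression, `mask_metadata`, and `verifies` are all assumptions. The point is only that a matcher which masks anything shaped like metadata, wherever it appears, will also accept differences inside a lookalike region.

```python
import re

# Anything shaped like an IPFS metadata blob: fixed head, 32-byte digest,
# "solc" key, three version bytes, and the 0x0033 length suffix.
METADATA_RE = re.compile(
    rb"\xa2\x64ipfs\x58\x22\x12\x20(.{32})\x64solc\x43.{3}\x00\x33",
    re.DOTALL,
)
PLACEHOLDER = b"{ipfs}".ljust(32, b"_")


def _mask(match):
    """Swap the 32-byte digest inside one match for a fixed placeholder."""
    blob = match.group(0)
    start = match.start(1) - match.start(0)
    return blob[:start] + PLACEHOLDER + blob[start + 32:]


def mask_metadata(bytecode: bytes) -> bytes:
    return METADATA_RE.sub(_mask, bytecode)


def verifies(expected: bytes, deployed: bytes) -> bool:
    """Hypothetical check: equal once every metadata-shaped digest is masked."""
    return mask_metadata(expected) == mask_metadata(deployed)


def fake_metadata(digest: bytes) -> bytes:
    return (bytes.fromhex("a2646970667358221220") + digest
            + bytes.fromhex("64736f6c6343000809") + b"\x00\x33")


# The lookalike blob sits in the middle of the code, not at the end.
prologue, epilogue = b"\x60\x80\x60\x40\x52", b"\x5b\x00"
expected = prologue + fake_metadata(b"\xaa" * 32) + epilogue
deployed = prologue + fake_metadata(b"\xbb" * 32) + epilogue
tampered = b"\x60\x81" + expected[2:]  # differs outside the masked region
```

Under this model, `verifies(expected, deployed)` holds even though the 32 masked bytes differ, while `tampered` is rejected.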

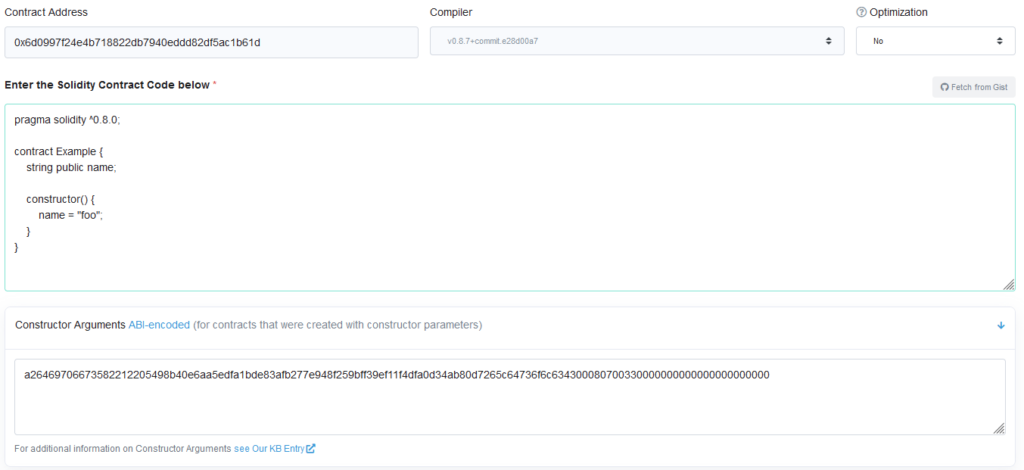

The easiest way I could think of to include some arbitrary bytecode in the creation transaction was to encode them as constructor arguments. Solidity encodes constructor arguments by appending their ABI-encoded forms directly onto the create transaction data.
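As a small illustration of that layout, the sketch below appends ABI-encoded static arguments to some creation code. The init code bytes and argument values are placeholders, and only `uint256` and `address` are handled.

```python
def encode_uint256(value: int) -> bytes:
    """Static ABI encoding of a uint256: 32 bytes, big-endian."""
    return value.to_bytes(32, "big")


def encode_address(addr_hex: str) -> bytes:
    """Static ABI encoding of an address: 20 bytes left-padded to 32."""
    raw = bytes.fromhex(addr_hex[2:] if addr_hex.startswith("0x") else addr_hex)
    if len(raw) != 20:
        raise ValueError("an address is exactly 20 bytes")
    return raw.rjust(32, b"\x00")


def creation_data(init_code: bytes, *encoded_args: bytes) -> bytes:
    """Creation transaction data: init code followed directly by the arguments."""
    return init_code + b"".join(encoded_args)


init_code = bytes.fromhex("6080604052")  # placeholder, not real init code
data = creation_data(
    init_code,
    encode_uint256(42),
    encode_address("0x" + "11" * 20),    # made-up address
)
```

Because the arguments are simply the tail of the transaction data, a verifier that knows the length of the compiled init code can separate them cleanly.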

However, Etherscan was too smart, and excluded the constructor arguments from any sort of metadata sniffing. You can see that the constructor arguments are italicized, to indicate that they're separate from the code itself.

This meant I would need to somehow trick the Solidity compiler into emitting a sequence of bytes that I controlled, so I could make it resemble the embedded metadata. However, this seemed like a nightmare of a problem to solve, since I would have almost no control over the opcodes or bytes that Solidity chooses to use without some serious compiler wrangling, after which the source code would look extremely suspicious.

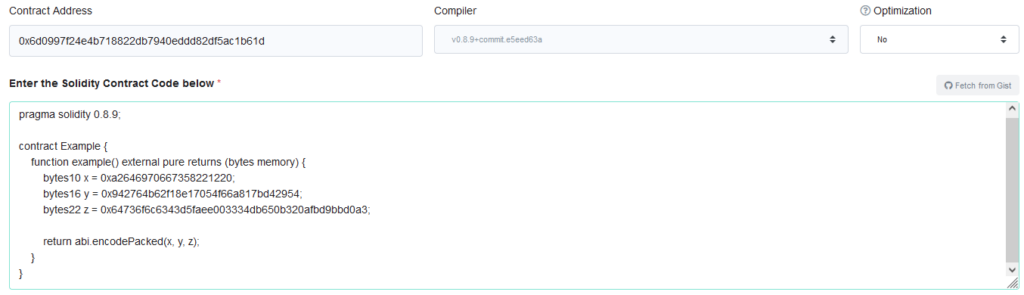

I considered this problem for a while, until it hit me: it was actually extremely easy to cause Solidity to emit (almost) arbitrary bytes. The following code will cause Solidity to emit 32 bytes of 0xAA.

bytes32 value = 0xaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa;
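The reason this works is the encoding of the EVM's PUSH instructions: the opcode byte is followed by the literal value. A minimal Python sketch of that encoding is below. It models only the instruction format, not what the compiler or optimizer will choose for any particular source line.

```python
PUSH1 = 0x60  # PUSH1 through PUSH32 occupy opcodes 0x60 through 0x7f


def push(data: bytes) -> bytes:
    """Encode a PUSHn instruction: one opcode byte, then n literal bytes."""
    if not 1 <= len(data) <= 32:
        raise ValueError("PUSH takes between 1 and 32 bytes of immediate data")
    return bytes([PUSH1 + len(data) - 1]) + data


push32 = push(b"\xaa" * 32)  # the bytes32 constant above
push20 = push(b"\x11" * 20)  # an address-sized constant (made-up value)
```

Here `push32` is the byte `0x7f` followed by 32 bytes of `0xaa`, and `push20` starts with `0x73`, so the constant appears verbatim in the bytecode.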

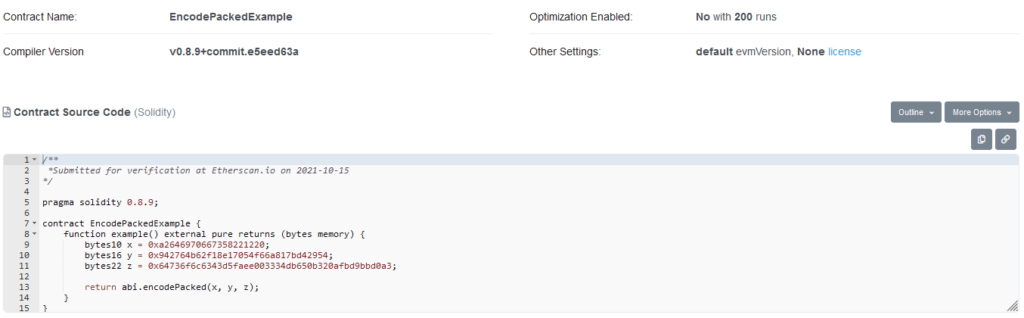

Motivated, I quickly wrote a small contract which would push a series of constants in such a way that Solidity would emit bytecode which exactly resembled the embedded metadata.

To my delight, Etherscan marked the presence of an IPFS hash in the middle of my contract, where no embedded metadata should ever be found.

I quickly copied the expected bytecode and replaced the IPFS hash with some random bytes, then deployed the resulting contract. Sure enough, Etherscan considered the differing bytes business as usual, and allowed my contract to be verified.

With this contract, the source code suggests that a simple bytes object should be returned when calling example(). However, if you actually try to call it, this happens.

$ seth call 0x3cd2138cabfb03c8ed9687561a0ef5c9a153923f 'example()'

seth-rpc: {"id":1,"jsonrpc":"2.0","method":"eth_call","params":[{"data":"0x54353f2f","to":"0x3CD2138CAbfB03c8eD9687561a0ef5C9a153923f"},"latest"]}

seth-rpc: error: code -32000

seth-rpc: error: message stack underflow (5 <=> 16)

I had successfully discovered an 0day within Etherscan, and now I could verify contracts which behaved completely differently from what the source code suggested. Now I just needed to design a puzzle around it.

A false start

Clearly, the puzzle would revolve around the idea that the source code as seen on Etherscan was not how the contract would actually behave. I also wanted to make sure that players couldn't simply replay transactions directly, so the solution had to be unique per-address. The best way to do this was obviously to require a signature.

But in what context would players be required to sign some data? My first design was a simple puzzle with a single public function. Players would call the function with a few inputs, sign the data to prove they came up with the solution, and if the inputs passed all the various checks then they would be marked as a solver. However, as I fleshed out this design over the next few hours, I quickly grew dissatisfied with how things were turning out. It was starting to become very clunky and inelegant, and I couldn't bear the idea of burning such an awesome 0day on such a poorly designed puzzle.

Resigning myself to the fact that I wouldn't be able to finish this in time for Friday, I decided to sleep on it.

Pinball

I continued trying to iterate on my initial design over the weekend, but made no more progress. It was like I'd hit a wall with my current approach, and even though I didn't want to admit it, I knew that I'd likely have to start over if I wanted something I'd be satisfied with.

Eventually, I found myself reexamining the problem from first principles. What I wanted was a puzzle where players had to complete a knowledge check of sorts. However, there was no requirement that completing the knowledge check itself was the win condition. Rather, it could be one of many paths that the player is allowed to take. Perhaps players could rack up points throughout the puzzle, with the exploit providing some sort of bonus. The win condition would simply be the highest score, therefore indirectly encouraging use of the exploit.

I thought back to a challenge I designed last year, Lockbox, which forced players to construct a single blob of data which would meet requirements imposed by six different contracts. The contracts would apply different constraints on the same bytes, forcing players to be clever in how they constructed their payload. I realized I wanted to do something similar here, where I would require players to submit a single blob of data and I would award points based on certain sections of data meeting specific requirements.

It was at this point that I realized I was basically describing pinboooll, a challenge I worked on during the finals of DEFCON CTF 2020. The gimmick with pinboooll was that when you executed the binary, execution would bounce around the control flow graph similar to how a ball bounces around in a pinball machine. By constructing the input correctly, you would be able to hit specific sections of code and rack up points. Of course, there was an exploit involved as well, but frankly speaking I'd already forgotten what it was and I had no intention of trying to find it again. Besides, I already had my own exploit I wanted to use.

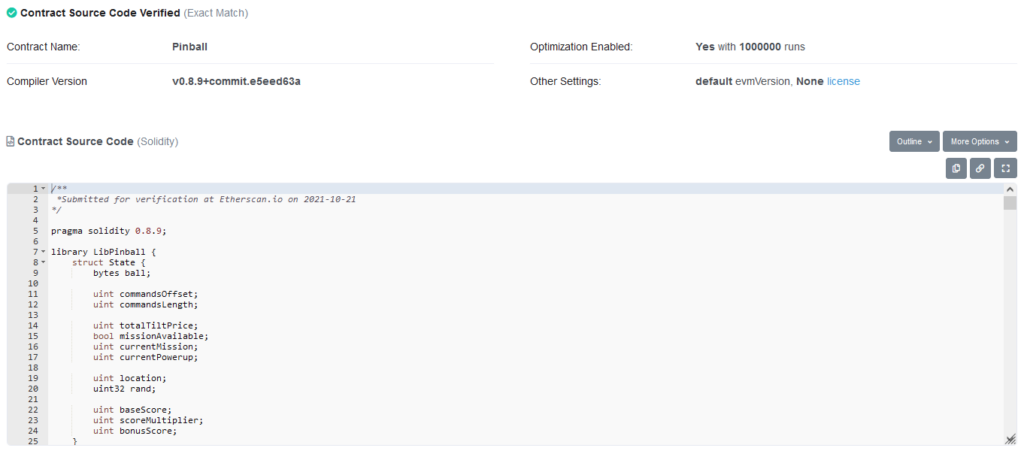

Since I was handling a live 0day here, I decided that I wanted to get the puzzle out as soon as possible, even if it meant compromising on how much of someone else's work I'd be copying. In the end, I spent a few hours refreshing myself on how pinboooll worked and a few days re-implementing it in Solidity. This took care of the scaffolding of the puzzle. Now I just had to integrate the exploit.

Weaponizing an 0day

My approach to getting Solidity to output the right bytes had always been to just load several constants and have Solidity emit the corresponding PUSH instructions. However, such arbitrary constants would likely be a huge red flag and I wanted something that would blend in slightly better. I also had to load all the constants in a row, which would be hard to explain in actual code.

Because I really only needed to hardcode two sequences of magic bytes (0xa264...1220 and 0x6473...0033), I decided to see if I could sandwich code between them rather than filling the gap with a third constant. In the deployed contract, I would just swap out the sandwiched code with some other instructions.

address a = 0xa264...1220;

uint x = 1 + 1 + 1 + ... + 1;

address b = 0x6473...0033;

After some experimentation, I found it would be possible, but only if the optimizer was enabled. Otherwise, Solidity emits too much value cleanup code. This was acceptable, so I moved on to refining the code itself.

I would only be able to modify the code between the two addresses, but it would be weird to see a dangling address at the end, so I decided to use them in conditionals instead. I also had to justify the need for the second conditional, so I threw in a little score bonus at the end. I made the first conditional check that the tx.origin matched a hardcoded value to give people the initial impression that there was no point pursuing this code path any further.

if (tx.origin != 0x13378bd7CacfCAb2909Fa2646970667358221220) return true;

state.rand = 0x40;

state.location = 0x60;

if (msg.sender != 0x64736F6c6343A0FB380033c82951b4126BD95042) return true;

state.baseScore += 1500;
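The two hardcoded addresses are where the magic bytes live. The Python check below takes the constants from the snippet above and confirms that the first ends with the metadata head and the second begins with the metadata tail. The variable names are mine.

```python
TX_ORIGIN_CONST = bytes.fromhex("13378bd7CacfCAb2909Fa2646970667358221220")
MSG_SENDER_CONST = bytes.fromhex("64736F6c6343A0FB380033c82951b4126BD95042")

METADATA_HEAD = b"\xa2\x64ipfs\x58\x22\x12\x20"  # map(2), "ipfs", bytes(34), multihash header
SOLC_KEY = b"\x64solc\x43"                       # "solc", bytes(3)

# First constant: ten arbitrary bytes, then the metadata head.
head_ok = TX_ORIGIN_CONST.endswith(METADATA_HEAD)

# Second constant: "solc" key, three "version" bytes, the 0x0033 length,
# then nine arbitrary bytes.
tail_ok = (
    MSG_SENDER_CONST.startswith(SOLC_KEY)
    and MSG_SENDER_CONST[9:11] == b"\x00\x33"
)
fake_version = MSG_SENDER_CONST[6:9]  # a0 fb 38, only needs to be three bytes long
```

Everything the compiler emits between those two constants then lands where a verifier expecting metadata would look for the IPFS digest.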

Now that the source code was all prepared, I had to write the actual backdoor. My backdoor would need to verify that the player triggered the exploit correctly, fail silently if they didn't, and award them a bonus if they did. I wanted to make sure the exploit couldn't be easily replayed, so I decided on simply requiring the player to sign their own address and to submit the signature in the transaction. For extra fun, I decided to require the signature to be located at offset 0x44 in the transaction data, where the ball would typically begin. This would require players to understand how ABI encoding works and to manually relocate the ball data elsewhere.
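To see why offset 0x44 is where the ball normally starts, and what relocating it involves, here is a Python sketch of the calldata layout. It assumes the entry point takes a single dynamic `bytes` argument, which the post does not state, and the selector, ball bytes, and signature are placeholders.

```python
WORD = 32


def word(n: int) -> bytes:
    return n.to_bytes(WORD, "big")


def pad32(data: bytes) -> bytes:
    return data + b"\x00" * (-len(data) % WORD)


def encode_canonical(selector: bytes, ball: bytes) -> bytes:
    # selector (4) + offset word (32) + length word (32) puts the data at 0x44.
    return selector + word(0x20) + word(len(ball)) + pad32(ball)


def encode_relocated(selector: bytes, ball: bytes, signature: bytes) -> bytes:
    filler = word(0)             # occupies calldata 0x24..0x44
    sig_area = pad32(signature)  # begins at calldata 0x44
    # Offsets are relative to the start of the arguments (just after the selector).
    ball_offset = WORD + len(filler) + len(sig_area)
    return (selector + word(ball_offset) + filler + sig_area
            + word(len(ball)) + pad32(ball))


def decode_bytes_arg(calldata: bytes) -> bytes:
    """Follow the offset word the way an ABI decoder would."""
    args = calldata[4:]
    offset = int.from_bytes(args[:WORD], "big")
    length = int.from_bytes(args[offset:offset + WORD], "big")
    return args[offset + WORD:offset + WORD + length]


selector = b"\x00\x00\x00\x00"  # placeholder, not the real selector
ball = b"ball-data"             # placeholder payload
signature = bytes(range(65))    # stand-in for a 65-byte (r, s, v) signature

canonical = encode_canonical(selector, ball)
relocated = encode_relocated(selector, ball, signature)
```

Both encodings decode to the same ball as long as the decoder simply follows the offset word, but only the relocated one leaves room for a signature at 0x44.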

However, here I ran into a big problem: it's simply not possible to fit all of this logic into 31 bytes of hand-written assembly. Fortunately, after some consideration, I realized that I had another 31 bytes to play with. After all, the real embedded metadata contained another IPFS hash that Etherscan would also ignore.

After some code golfing, I arrived at a working backdoor. In the first IPFS hash, I would immediately pop off the address that just got pushed, then jump to the second IPFS hash. There, I would hash the caller and partially set up the memory/stack for a call to ecrecover. Then I would jump back to the first IPFS hash where I finish setting up the stack and perform the call. Finally, I set the score multiplier to be equal to (msg.sender == ecrecover()) * 0x40 + 1, which meant that no additional branching was needed.
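The multiplier formula is worth spelling out, since it is what saves the branch. The comparison yields 0 or 1, so the result is either 1 or 0x41. A Python sketch of the same arithmetic, with made-up addresses and a hypothetical function name:

```python
def score_multiplier(sender: str, recovered: str) -> int:
    """Branchless form of (msg.sender == ecrecover()) * 0x40 + 1."""
    matches = int(sender.lower() == recovered.lower())  # 1 on match, 0 otherwise
    return matches * 0x40 + 1


player = "0x" + "ab" * 20  # made-up address
other = "0x" + "cd" * 20   # made-up address

bonus = score_multiplier(player, player)  # valid signature: 0x41, i.e. 65
plain = score_multiplier(player, other)   # anything else: 1
```

A failed check leaves the multiplier at 1, so a wrong or missing signature fails silently rather than reverting.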

With the backdoor down to size, I tweeted out my Rinkeby address in order to get some testnet ETH from the faucet, and to drop a subtle hint to anyone watching Twitter that something might be coming. Then, I deployed the contract and verified it.

Now all that was left to do was wait for someone to discover the backdoor that was hiding in plain sight.

Disclaimer: This post is for general information purposes only. It does not constitute investment advice or a recommendation or solicitation to buy or sell any investment and should not be used in the evaluation of the merits of making any investment decision. It should not be relied upon for accounting, legal or tax advice or investment recommendations. This post reflects the current opinions of the authors and is not made on behalf of Paradigm or its affiliates and does not necessarily reflect the opinions of Paradigm, its affiliates or individuals associated with Paradigm. The opinions reflected herein are subject to change without being updated.